Abstract

One of the biggest challenges faced by social media networks today is content moderation. This becomes a problem when potentially offensive content demonstrating violence, portraying sexually explicit images, advocating the use of weapons, etc are posted. We would like to create a model that can auto-moderate such content.

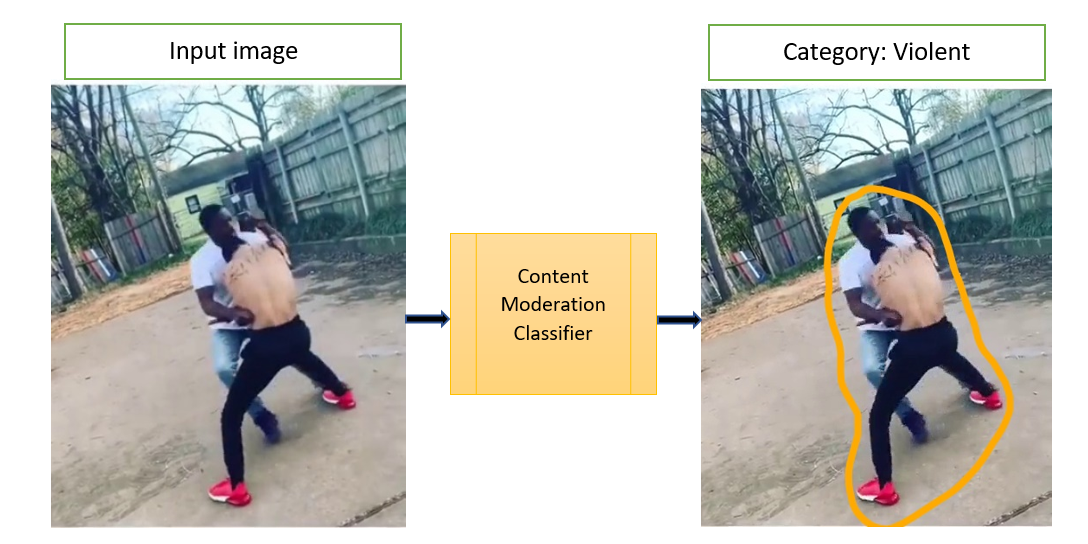

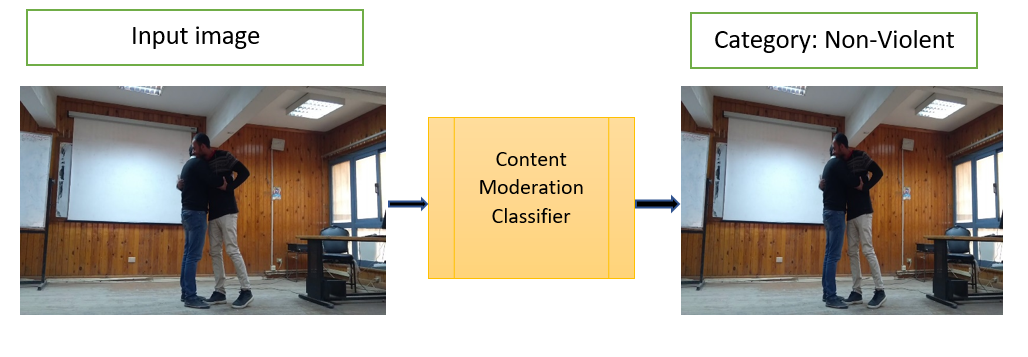

The main objective of our project is to robustly classify and flag images as offensive or non-offensive. These images can be offensive due to the presence of violence, blood, weapons, sexual or other graphic content. Our model should be able to discriminate between such inappropriate images and other images. The classification decision made by the system should also be explainable to ensure that the system does not harbour any biases such as flagging all images with humans as offensive or tagging all images with red liquids as offensive.

Our initial set of experiments was to build a classifier that could discriminate between offensive and inoffensive images. Using transfer learning with convolutional neural networks, we were able to achieve a 89% accuracy in our task.

Introduction

Since the advent of social media, text, images and videos uploaded by users become visible and accessible to millions of other users. This becomes a problem when potentially offensive content demonstrating violence, portraying sexually explicit images, advocating the use of weapons, etc are posted. While there are human content reviewers, the volume of data produced automatically makes this a problem that needs to be solved at scale and is thus a good candidate to be solved using learning techniques. There are numerous issues with content moderation [2]. One of the main problems encountered with content moderation is that the process is both inconsistent and lacks transparency in terms of why a certain content is flagged. So, any robust automation system should be able to consistently capture the correct contents that need to be flagged and be able to clearly explain the reason for flagging of a certain content. This is important as we would like to be able to scale with ease (automated flagging) and at the same time take care to provide feedback (explainability) to the user uploading the content as well as to potentially any human moderators auditing the moderation results to help prevent cases where images get mislabelled as offensive, to investigate appeals from users, etc. In our system, we will be working with images to classify them as offensive or not while also explaining the reason behind the decision.

Approach

We approach Content Moderation as a binary classification problem with 2 broad categories - offensive images and non-offensive images.

We use Convolutional Neural Networks (CNNs) to perform a supervised binary classification of images into these two categories. Specifically, we perform feature extraction on the images in our dataset using transfer learning from the following pre-trained CNNs to build the binary classifier.

- Alexnet

- Resnet-18

- Inceptionv3

- VGG16

We faced obstacles mainly in terms of the data, there are not many violence datasets available. We overcame this by sampling images from 1000 violent videos collected from Youtube[4] by extracting frames from these videos at a frame rate of 0.5, which is one frame every 0.5 seconds. However, it is worth noting that all frames of a violent video are not necessarily violent. Although this number is small, we expect it to affect our model in some way, and a future plan is to devise a method to overcome this. For example, a thumb rule that could be applied is to drop the first few frames and the last few frames of a violent video, assuming that the major chunk of violence occurs in the central part of a video.

In the second phase, we intend to use Grad-CAM[1], which is a class-discriminative localization technique to generate visual explanations of the salience regions in the classified images that caused the images to be classified as offensive.

In performing the classification and generating visual explanations, we build what would form the basis (a minimum viable product version) of a content moderation system for offensive images. The system could help end-users / human moderators identify images to be flagged as well as ‘see’ what caused the image to be flagged as offensive. In further experiments, we aim to explore how augmenting an image and/or replacing certain parts of the image with specific offensive/non-offensive content changes the behaviour.

In our approach we had to make several design choices and judgement calls which we have addressed as follows :

- Why are we approaching the problem as a classification problem and proposing the use of CNNs? What about object detection or any other techniques?

We expect that there are latent factors involved in identifying an image as potentially offensive. For instance, in the case of differentiating between images portraying violence and other images, we may not always be able to look for objects (such as knives), or something specific like fire/blood, etc in the scene in a manner that is scalable. Classification of the images using a fine-tuned Convolutional Neural Network will help us identify the nuances involved in flagging the images. With the proposed approach, we should be able to understand and see the reasoning behind the same as well. We believe that such a model is more helpful in a real-world setting where there’s usually a combination of human content-reviewers, rule-based systems and ML systems working together. This could help reduce the gap in understanding the reasons behind the machine’s take on a certain piece of content versus that of a human.

- Why didn’t we formulate the problem as classifying videos, when we have a video dataset available?

It is far more common to have offensive images and we can always sample images from videos. Thus, formulating the problem as image classification has a much wider application scope.

- Of the two transfer learning approaches - fine-tuning and fixed feature extraction, why did we go with the latter?

Since our dataset is relatively small and does not have a variety of unique examples, it is not a good idea to fine-tune the ConvNet due to overfitting concerns. We expect higher-level features in the ConvNet to be relevant to this dataset as well. Hence, we are using the pre-trained network as a feature extractor and retrain the final layer for our classification problem. Thus, we derive predictions by updating only the final layer weights.

- Why did we use these 4 models to train the classifiers?

Our intention is to compare the performance metrics of different classifiers and choose the best one going forward. AlexNet, VGG16, ResNet and InceptionV3 are all landmark ConvNet architectures trained on ImageNet and have proven to demonstrate a high accuracy. These networks have been used for other transfer learning tasks and perform well over a wide range of problems.

- Why did we choose this particular dataset?

This dataset contains a balanced mix of both violent and non-graphic videos from Youtube. The real-life violent content comes majorly from street fights. Apart from the violence videos, there are videos containing regular activities like eating and playing football. We intend to extract frames from each video and treat them as separate, labelled images. This wide range of activities in the dataset would help us make our model robust and take care of corner cases and false positives.

Experiments and Results

We trained our dataset using the four different classifiers mentioned above, all of them CNN architectures.

We split the data set into 3 sets - train, validation and test. The classifiers are trained using a transfer learning approach where we extract features from pre-trained convnets and modify the final fully-connected layer to suit our data. The transfer learning is performed on a ConvNet pretrained on ImageNet and backprop is done on only the final, modified layer and the weights from the pretrained layers are kept as is. Thus the rest of the ConvNet as a fixed feature extractor for the new dataset.

Details about the data used: (Images are sampled from the videos from [4] via an offline process)

| Type of Data |

Number of Violent Images | Number of Non-Violent Images | Total Number of Images |

|---|---|---|---|

| Training | 8091 | 9409 | 17500 |

| Validation | 1513 | 1506 | 3019 |

| Test | 1506 | 1501 | 3007 |

As per our dataset, a ‘dumb’ classifier (always ‘yes’ or always ‘no’) or a random classifier(randomly classification) would perform classification with an accuracy of close to 50%.

Algorithms used and their parameters

| Parameter | Value used | Motivation |

|---|---|---|

| num_classes | 2 | The images need to be classified as ‘violent’ or ‘non-violent’. |

| batch_size | 4/8 | Batch-size was 8 for Inception, AlexNet and VGG. ResNet used a batch size of 4. The batch sizes was chosen due to computational limitations of the machines we were using. |

| num_epochs | 15 | We used the pre-trained models as feature extractors and were training the last layer for our classification problem. In this case, 15 epochs was sufficient for the models to learn the problem. We were not noticing any changes beyond the first few epochs, so we capped it at 15. |

| learningRate, momentum | 0.001, 0.9 respectively | The learning rate and momentum were set to these values to increase the probability of not getting stuck at a local optima. |

| Image Transforms | Resized Crop and Horizontal Flip | We did this to augment the dataset to be invariant to scale and rotation changes. This captures the variety/variance of real world scenes that will be encountered by the system during the test phase. |

| Validation | We had a validation dataset (that was independent from both the training and the testing data) that was used to determine if the model was overfitting. The best performing model on the validation data for a particular network was used as the final network for testing. |

Results

| Model | Accuracy | Precision | F1 Score | Recall |

|---|---|---|---|---|

| AlexNet | 89% | 90% | 88 | 86% |

| VGG | 92% | 95% | 92 | 89% |

| InceptionV3 | 89% | 92% | 89 | 86% |

| Resnet | 88% | 88% | 88 | 88% |

Qualitative Results and trends

True Positives (Violent classified as violent)

As we can see from the examples below, these are images in which violence is very apparent in each frame. Each image has two or more people engaged in a physical fight. The fight is not occluded and therefore, our model is able to classify it accurately.

|

|

True Negatives (Non-violent classified as non-violent)

These are examples of different types of non-violent images our model might encounter in the real world. Scenes from movies and news clips. People are engaged in a conversation or cheering for their favourite sports teams. This proves that our model does not simply classify images with people in close proximity as violent and thus proves that the model has learned useful characteristics in differentiating violent and non-violent images.

|

|

|

False Negatives (Violent classified as non-violent)

There were two major categories within the set of false negative images.

- Since we extracted frames from a video, there are certain images which are non-violent but have been labelled as ‘violent’ in the ground truth. These images increase the false negative rate in our model. One of the post-processing steps that we could consider would be to use a majority voting decision for all frames of the video, for our particular dataset.

|

|

|

|

- Another category of false negatives arise from the presence of occlusions or when images in themselves are not violent but as a succession of images demonstrates a violent intention.

|

|

|

|

For example, in the first row of images, due to occlusion and blurring the model is unable to identify it as a violent image. The second row shows cases of violence where a particular image in itself may not contain enough characteristics to be called as violent but it is true that the series of images demonstrates violence or that there is potential violence that could follow (for eg., based on the setting in the image on the bottom right, we might be able to extrapolate that there was violence demonstrated at some later point in time)

False Positives ( Non violent classified as violent)

We noticed a few interesting set of cases for false positive images.

In the first row, we suspect that the presence of a crowd in the left-most image is leading to it being classified as violent. Similarly, the positioning of the players in the right-most image seem similar to the common setting in most violent scenes.

The images in the bottom row were interesting candidates of false positives. We would need to analyze the salient regions using grad-cam visualizations in phase 2 to see what aspect of the image could be causing the misclassification. These are the kind of incorrect classifications which could be easily overturned by a human moderator (or for users, help them understand better and raise an appeal to overturn moderation decisions) if they were able to see what regions were contributing to the misclassification. Further, we believe this should help us train the model better by providing better examples that allow the model to learn better.

|

|

|

|

|

|

Conclusion and future work

Accuracies above 88% were observed using the proposed approach on all 4 models, with a highest accuracy of 95% and highest recall of 89%. Qualitative results demonstrate cases where the models are able to correctly classify a range of violent images as violent as well as specific cases where the classifier has not been able to detect violence correctly with potential explanations for the same.

In phase 2, we intend to integrate Grad-CAM to visualize and explain the results to complete what would make for a minimum viable robust, explainable content moderation system.

In addition to the above, we intend to explore if we can automatically replace salient regions identified in violent images with a blurred version of the region as a means to potentially auto-moderate the image.

We also intend to test our model with varying degrees of violence (eg. replacing two people fighting with an entire mob) to note its performance and investigate the salient regions that get returned for images classified as offensive.

Team

Shalini Chaudhuri ⋅ Rohit Mujumdar ⋅ Sushmita Singh ⋅ Sreehari Sreejith